Record

cid:

"bafyreifde3xdd3qodruw22mer3yhnwesxajkdfpwmxpkjz6jyfzw55tica"

value:

text:

"Finally created an official policy for AI/LLMs in class (compaf24.classes.andrewheiss.com/syllabus.htm...). I've been radicalized by this new article arguing that ChatGPT and friends are philosophical bullshit machines: doi.org/10.1007/s106..."

$type:

"app.bsky.feed.post"

embed:

$type:

"app.bsky.embed.images"

images:

alt:

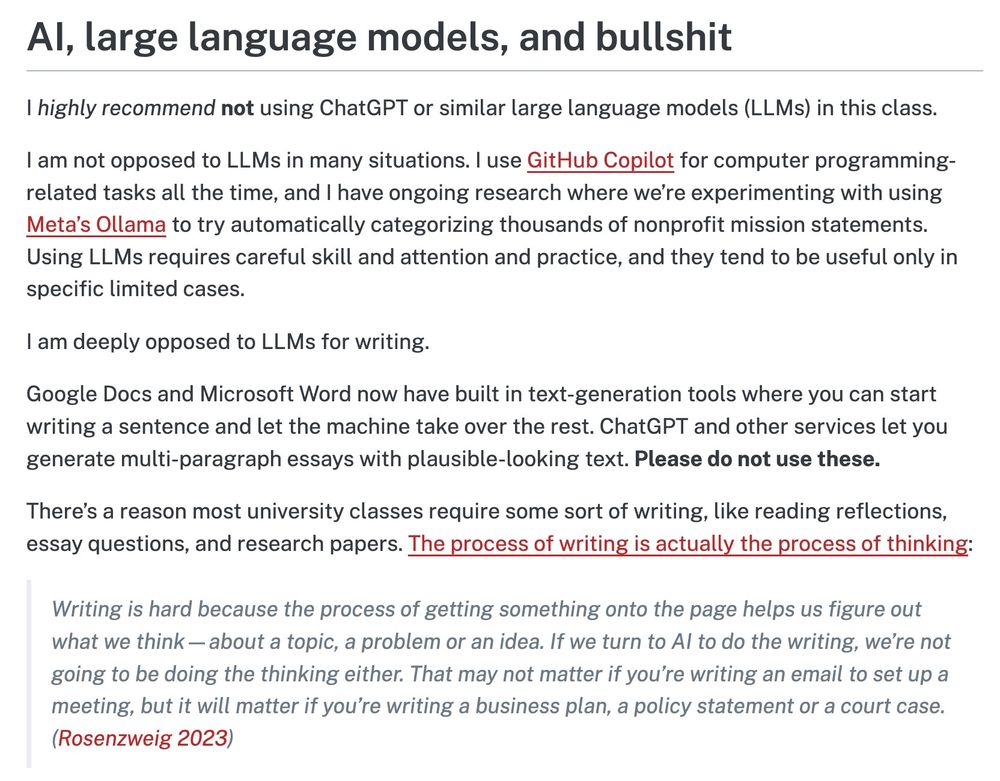

"(See link for full text) AI, large language models, and bullshit I highly recommend not using ChatGPT or similar large language models (LLMs) in this class. I am not opposed to LLMs in many situations. I use GitHub Copilot for computer programming-related tasks all the time, and I have ongoing research where we’re experimenting with using Meta’s Ollama to try automatically categorizing thousands of nonprofit mission statements. Using LLMs requires careful skill and attention and practice, and they tend to be useful only in specific limited cases. I am deeply opposed to LLMs for writing. Google Docs and Microsoft Word now have built in text-generation tools where you can start writing a sentence and let the machine take over the rest. ChatGPT and other services let you generate multi-paragraph essays with plausible-looking text. Please do not use these."

image:

$type:

"blob"

mimeType:

"image/jpeg"

size:

390289

aspectRatio:

width:

1664

height:

1300

alt:

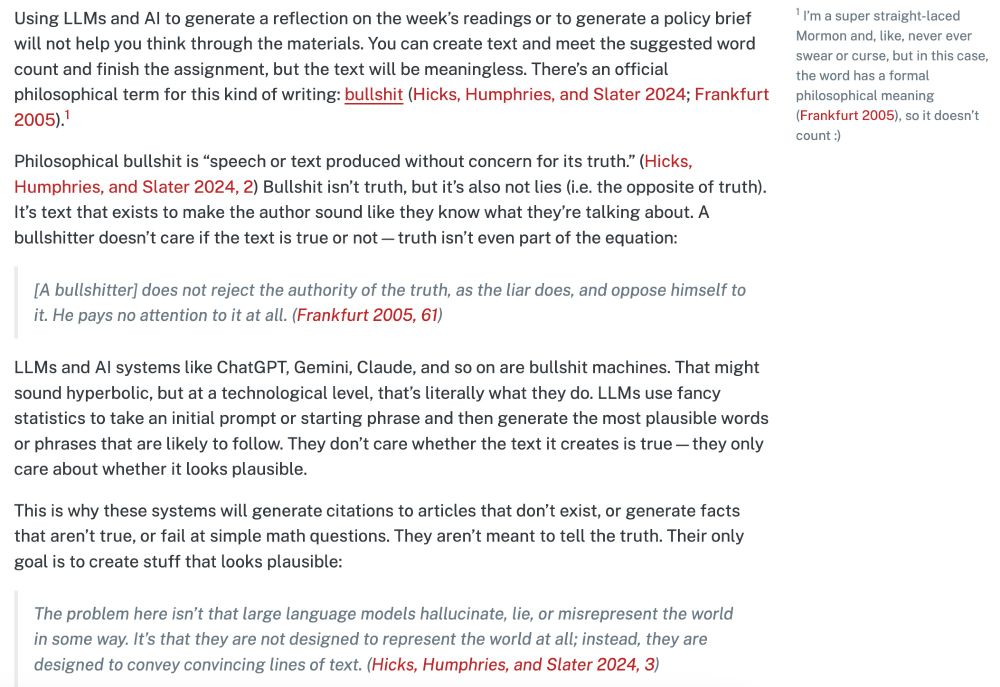

"(See link for full text) Using LLMs and AI to generate a reflection on the week’s readings or to generate a policy brief will not help you think through the materials. You can create text and meet the suggested word count and finish the assignment, but the text will be meaningless. There’s an official philosophical term for this kind of writing: bullshit (Hicks, Humphries, and Slater 2024; Frankfurt 2005).1 1 I’m a super straight-laced Mormon and, like, never ever swear or curse, but in this case, the word has a formal philosophical meaning (Frankfurt 2005), so it doesn’t count :) Philosophical bullshit is “speech or text produced without concern for its truth.” (Hicks, Humphries, and Slater 2024, 2) Bullshit isn’t truth, but it’s also not lies (i.e. the opposite of truth). It’s text that exists to make the author sound like they know what they’re talking about. A bullshitter doesn’t care if the text is true or not—truth isn’t even part of the equation:"

image:

$type:

"blob"

mimeType:

"image/jpeg"

size:

448164

aspectRatio:

width:

2000

height:

1375

alt:

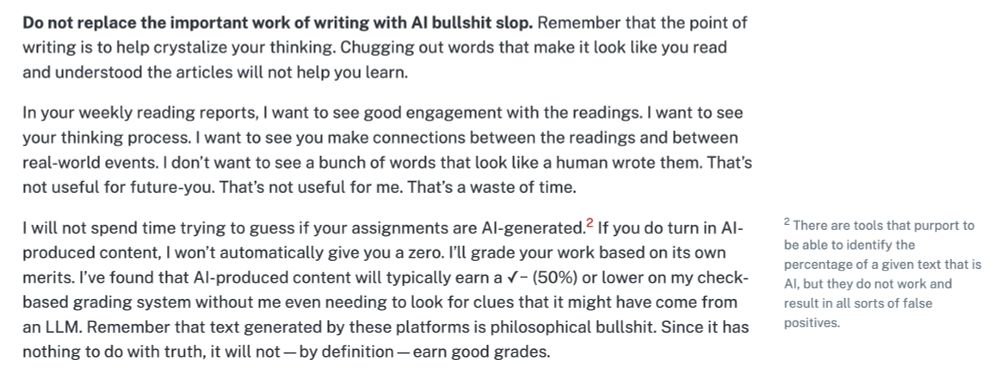

"(See link for full text) Do not replace the important work of writing with AI bullshit slop. Remember that the point of writing is to help crystalize your thinking. Chugging out words that make it look like you read and understood the articles will not help you learn. In your weekly reading reports, I want to see good engagement with the readings. I want to see your thinking process. I want to see you make connections between the readings and between real-world events. I don’t want to see a bunch of words that look like a human wrote them. That’s not useful for future-you. That’s not useful for me. That’s a waste of time. I will not spend time trying to guess if your assignments are AI-generated.2 If you do turn in AI-produced content, I won’t automatically give you a zero. I’ll grade your work based on its own merits. I’ve found that AI-produced content will typically earn a ✓− (50%) or lower on my check-based grading system without me even needing to look for clues"

image:

$type:

"blob"

mimeType:

"image/jpeg"

size:

695635

aspectRatio:

width:

2000

height:

757

alt:

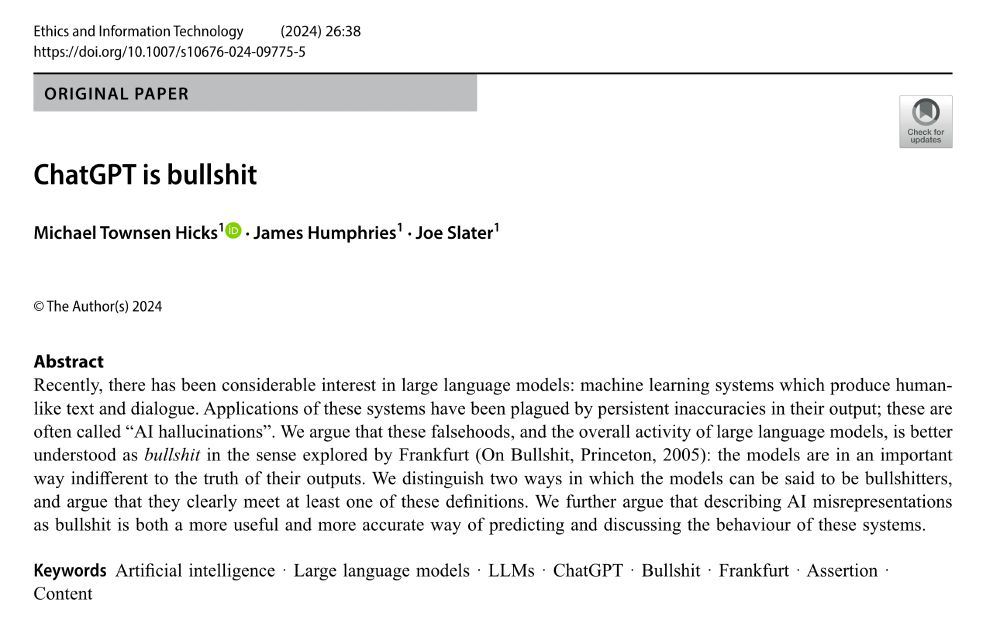

"ChatGPT is bullshit Michael Townsen Hicks, James Humphries & Joe Slater Abstract Recently, there has been considerable interest in large language models: machine learning systems which produce human-like text and dialogue. Applications of these systems have been plagued by persistent inaccuracies in their output; these are often called “AI hallucinations”. We argue that these falsehoods, and the overall activity of large language models, is better understood as bullshit in the sense explored by Frankfurt (On Bullshit, Princeton, 2005): the models are in an important way indifferent to the truth of their outputs. We distinguish two ways in which the models can be said to be bullshitters, and argue that they clearly meet at least one of these definitions. We further argue that describing AI misrepresentations as bullshit is both a more useful and more accurate way of predicting and discussing the behaviour of these systems."

image:

$type:

"blob"

mimeType:

"image/jpeg"

size:

524557

aspectRatio:

width:

2000

height:

1254

langs:

"en"

facets:

index:

byteEnd:

105

byteStart:

57

features:

$type:

"app.bsky.richtext.facet#link"

index:

byteEnd:

244

byteStart:

221

features:

$type:

"app.bsky.richtext.facet#link"

createdAt:

"2024-08-19T18:11:15.921Z"
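
The `byteStart`/`byteEnd` pairs under `facets` are offsets into the UTF-8 encoding of `text`, with the start inclusive and the end exclusive. The following is a minimal sketch of how such a range maps back to the linked span; the helper name `facet_text` and the variable `post_prefix` are hypothetical and are not part of the record or of any Bluesky library. Only the first facet (57 to 105) is checked, against the leading part of the post text. The second range is left out because the viewer may have normalized whitespace in the displayed text.

```python
def facet_text(text: str, byte_start: int, byte_end: int) -> str:
    """Return the substring covered by a facet's byte range.

    Offsets count UTF-8 bytes, not characters: byte_start is inclusive,
    byte_end is exclusive.
    """
    raw = text.encode("utf-8")
    return raw[byte_start:byte_end].decode("utf-8")


# Leading part of the post text from the record above, through the first link.
# (Hypothetical variable; the record itself stores the full text.)
post_prefix = (
    "Finally created an official policy for AI/LLMs in class "
    "(compaf24.classes.andrewheiss.com/syllabus.htm...)."
)

# First facet in the record: byteStart 57, byteEnd 105.
first_link = facet_text(post_prefix, 57, 105)
print(first_link)
```

Slicing the encoded bytes rather than the Python string matters once the text contains non-ASCII characters, since a single character can occupy several bytes.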